It was fantastic to see the recent work by the Southwest Research Institute and San Antonio Spurs into the validity and reliability of the Catapult OptimEye S5 (find it here if you haven't already read it). It adds to the growing list of papers that take a good look at the quality of the information coming from technology that we regularly take for granted.

Their results suggest that we need to pay closer attention to the basics around calibration and validation of the units. But is this something we should be expecting of ourselves? Or is it the responsibility of the technology provider? It probably should be expanded to all of our technology, so should that be tasked to an independent body, or does it need to be done in-house? In this case, it's probably a combination of in-house calibration in partnership with a semi-independent body like a university or research institute with a link to the professional sporting organisation, but there's more to this question than this one situation.

For me, it also raises a bigger question of how quality assurance can be incorporated into the modern-day sport science service in a professional sporting organisation. With small staff numbers and short turnarounds between games and seasons, quality assurance normally gets missed and, in my experience, is rarely addressed. I've definitely been guilty of this, but when I've been able to step back a little from the frenetic day-to-day operations, it's clear that a better approach to QA would not only have led to a better and more professional operation but would also have made that day-to-day servicing easier.

To my mind, the National Sport Science Quality Assurance (NSSQA) Program run by the AIS has been a cornerstone of the success of sport science within the network of institutes around Australia. I can remember the discussions we had around a biomechanics NSSQA module that went around in circles without much resolution. Looking back, although I was very new to the institute system at the time, I'm disappointed that I didn't understand the benefits that good QA processes would provide and didn't devote enough time and energy to making the NSSQA process work.

I can now see that good QA will not only improve the quality, accuracy and confidence in the information provided but, amongst other benefits, will help with succession planning (critical in this industry given the high turnover of staff), accessing and understanding historical data, identifying areas where professional development will help staff, and helping semi-skilled staff, like the expanding number of trainees brought into pro environments, deliver a competent service.

Perhaps most importantly, it will save the most critical commodity in a pro organisation - time.

For sure, it will cost time to get it up and running to begin with. But that doesn't necessarily mean that you need to take time out from the service you are already providing. There are people out there who can help set these systems up for you. I for one have quality assurance as a part of my health check service (kind of like an audit to help clubs make the most of the resources available to them).

The bottom line for professional sporting organisations is that QA is important, it is not expensive, it will save you time, and it will make you more elite.


We were born under a month apart, so for my entire adult life Tiger Woods has been a constant presence in the sporting world, and a constant source of fascination. For me, the fascination has been heightened by how he has tried to adapt his game to his body, who he has reached out to (including members of my own profession of biomechanics) and how that's helped or hindered his game. This year will clearly be no different, with the question of 'is he back?' being constantly asked and analysed.

For a veteran like Tiger, the mountain of statistics available in golf (freely available from the PGA Tour website) lets you dive deep into historical data, looking for trends and breakpoints, such as points where injuries occurred or his equipment changed. You can very easily get lost mining away at the data, looking with increasing precision into individual elements of his game.

This is a feature of a lot of modern sports analytics, and I think it's mostly a waste of time. I've done this sort of work with a lot of different sports at the truly elite level, and the interconnections between the different stats make them more a source of idle interest than something that can truly be used to affect and measure performance in an applied setting.

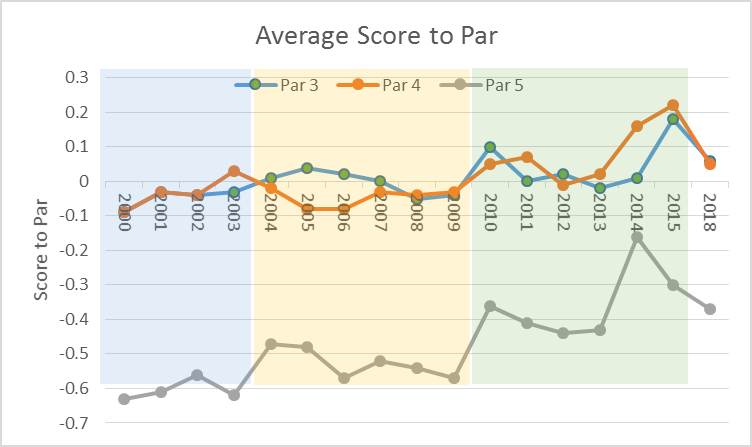

So, cutting through all the bollocks, here's the graph of what I see as the important bit.

In his heyday, his scoring was clearly driven by his average score to par on par 5s. There were years when he was really struggling for results and his par 3 and par 4 performance reflected that, but there were also years like 2012 (3 wins) and 2013 (5 wins) when he had success (including 3 top 10s at majors) and his par 4 performance was close to his long-term average. This really just confirms the general commentariat view that his success will be driven (pardon the pun) by his performance with the longer clubs off the tee, but it also provides a concrete focus that takes into account all the interplay of distance, accuracy and course management. You have a very specific yet still general set of standards to measure yourself by, and every intervention you put in place can be brought back to, and evaluated by, these statistics.
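For anyone wanting to reproduce this kind of graph, here is a minimal Python sketch. It assumes hole-by-hole scores have already been exported as (season, par, strokes) records; that layout and the function names are hypothetical (not the PGA Tour's own format), and the numbers are made up. It computes the average score to par for each par type in each season, then each season's deviation from the career figure for that par type, which is the comparison the graph relies on.

```python
from collections import defaultdict
from statistics import mean


def score_to_par_by_season(holes):
    """Average score to par for each (season, par) pair.

    `holes` is an iterable of (season, par, strokes) tuples, a hypothetical
    export layout. Negative values mean under par.
    """
    groups = defaultdict(list)
    for season, par, strokes in holes:
        groups[(season, par)].append(strokes - par)
    return {key: mean(diffs) for key, diffs in groups.items()}


def deviation_from_career(averages):
    """Each season's average minus the career average for the same par type.

    The career figure here is the mean of the season averages, so every
    season is weighted equally regardless of how many holes were played.
    """
    by_par = defaultdict(list)
    for (_, par), value in averages.items():
        by_par[par].append(value)
    career = {par: mean(values) for par, values in by_par.items()}
    return {(season, par): value - career[par]
            for (season, par), value in averages.items()}


# Made-up example: two seasons, a handful of par 4s and par 5s
holes = [(2012, 5, 4), (2012, 5, 5), (2012, 4, 4),
         (2013, 5, 4), (2013, 4, 5), (2013, 4, 4)]
averages = score_to_par_by_season(holes)
deviations = deviation_from_career(averages)
for key in sorted(deviations):
    print(key, round(averages[key], 2), round(deviations[key], 2))
```

Plotting the deviations by season for par 3s, par 4s and par 5s gives the same kind of picture as the graph above, with everything expressed relative to the player's own long-term level.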

The take home message from this is that there is always the temptation to delve deeper, and that temptation doesn't need to be avoided. But before going deeper with the next step, the best thing to do would be to go back to the broader view to make sure that going deeper is actually going to do something other than satisfy a curiosity.

So, how is this year shaping up? After two tournaments, the par 5 performance is OK, but the par 3 and par 4 performance needs some work, which suggests the issue is the approach to and work around the green, an area where he has traditionally been quite stable (given his long-term par 3 performance). What I would expect to see as the season progresses and the rust is shaken off is that the work into and around the green will improve with more competition, and we could very well see the 2012/13 Tiger back again very soon. Are we going to see the 2007/08/09 Tiger back? Perhaps, but when you delve a little deeper, his driver club head speed so far is around 5 mph down on those years, so you'd expect that his par 5 performance won't improve enough to get back to that level.

Still, fascinating to watch it all unfold.

I was recently asked to give a presentation at a Dartfish expo day. The day ended up being rescheduled and clashed with a trip to the UK, so I wasn't able to present, but getting the presentation ready was a really useful opportunity to reflect on where biomechanics and performance analysis are now and where they are likely to go in the future.

The talk itself ended up being very similar to a previous post, but I thought I'd share it here anyway. Plus, any excuse to use this YouTube clip again:

I love this footage, and it's also a great way to look back at the setup required for high speed video and in-the-field analysis of technique in the late '70s. The high speed camera system that the Biomechanics department at UWA used here was complicated, required experts to set up and run, and took a long time before the results could be reviewed. Fast forward to the present day and we can get close to 1000 frames per second on a smartphone. Sony has been including 200 fps in their Handycams since around 2005, and has really led the way in pushing high speed capability into more consumer friendly models. Everyone has at least seen the 240 fps slow motion of the iPhone 6 and later, and this has been beaten by the Sony Xperia XZ Premium, which records at 960 fps.
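To put those frame rates in context, the arithmetic below shows how far a fast-moving implement travels between consecutive frames. The 30 m/s speed is an illustrative assumption rather than a measured value, 25 fps stands in for standard video, and 1000 fps stands in for the top-end smartphone figure.

```python
def frame_interval_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def travel_per_frame_cm(speed_m_per_s, fps):
    """Distance (cm) an object at constant speed covers between consecutive frames."""
    return speed_m_per_s * 100.0 / fps


SPEED_M_PER_S = 30.0  # illustrative implement speed, not a measured value

for fps in (25, 200, 240, 1000):
    print(f"{fps:>4} fps: {frame_interval_ms(fps):5.2f} ms between frames, "
          f"{travel_per_frame_cm(SPEED_M_PER_S, fps):6.2f} cm of travel")
```

At 25 fps the implement moves more than a metre between frames, which makes it easy to miss the instant of impact altogether; at 200 fps that drops to 15 cm, and at 1000 fps to about 3 cm.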

So where is this going in the future? It's just going to get easier to get great footage. Sony has also released the Action Cam package, which allows multi-camera setups with synchronised capture at 200 fps, controlled from a portable unit that can be worn as a watch (which also allows remote review of the video that's just been captured). There are also powered tripod mounts that can follow a player, providing isolated vision automatically.

Complicated setups that required specialist staff will steadily become a thing of the past. Consequently, teamwork within the capture stage will become even more important than now. Performance analysis staff will likely move to co-ordinating a semi-automated capture (or just using broadcast footage which is becoming more and more accessible) rather than doing the capture themselves, which means they need to work collaboratively rather than independently to get the footage that is needed. Freeing them up from just standing behind the camera should also leave plenty of time for another aspect - data capture of wearable monitors and 'smart' sporting equipment.

Sony has also released the Smart Tennis Sensor, a monitor that sits on the end of the racquet handle and gives some really detailed metrics on performance. In the past this would have required extremely specialised staff (and possibly bespoke equipment and applications, which would almost certainly have been prohibitively expensive). Now it's something athletes can do themselves, from installing the sensor through to capture and download. I haven't taken a close look at this sensor and don't know how accurate it is or which metrics can be relied on (they may all be fine, but there might also be some dodgy ones), but it is at least an indicator of where the future lies.

A sensor I have done some testing with is the Blast baseball sensor. Like the Sony tennis sensor, it sits at the end of the bat and provides metrics on bat movement. There are also 'smart' soccer balls from Adidas, wearable sensors for basketball shooting, and of course the explosion in GPS systems.

There are also metrics on the outcome of the movement. The TrackMan system has been used for many years now, using radar to follow the path of the ball not just in golf, but also in baseball, tennis, and athletics (hammer and shot put). These metrics are really, really important as they provide real numbers on actual performance rather than performance inferred from technique, where you need to compare the athlete's technique to an ideal technique that is often not properly matched to the physical constraints on that athlete.

Technique itself is also becoming easier to quantify. Systems like the K-Vest are becoming user friendly enough for coaches to use themselves, without the need for specialist biomechanics staff for setup and operation. So overall, the capture element will become more automated, easier to set up and less dependent on specialist staff. However, all this means is that the staff who in the past had to be hands on in the capture stage can be more hands off, co-ordinating the capture and helping everyone, from athlete to coach to support staff, work as a team to get the vision and data needed for the next stage: analysis.

The analysis stage is where quality staff and, perhaps more importantly, structure and teamwork within a team of service providers can really provide a competitive advantage. Elite support staff (smart, technically sound, experienced professionals) can take the raw data coming in from all these disparate sources and turn it into information that the coach and athlete can use to make good, informed decisions.

Here's one example of where the future could be headed. The Blast baseball sensor I talked about earlier is a great example of the new breed of smart sensors. It can be installed and used in just about any situation, it's small enough to be used in normal training or competition (so the ecological validity of the numbers is really high), the athlete can do the installation and capture themselves, video can be captured on a smartphone, and the application automatically links the data and the video. So, on the surface, it's an all-in-one solution.

However, there are limitations, and with a little extra information the results could be so much better. The first area where the sensor falls down when used by itself is that it's not an outcome based measure, and the interaction between metrics like bat speed, power, 'blast' factor and attack angle means there aren't necessarily global ideal values to use as a model, let alone a model individualised to an athlete's own physical strengths and constraints. What you really need is to pair it with an outcome based measure, like TrackMan, to identify what is a good number and what is a bad number for an individual. The outcome measure could be as simple as categorising good and bad contact (through subjective coach or athlete evaluations), but if you can add some more advanced metrics, like those available from TrackMan (if you have $30,000 to spare) or Rapsodo (if you have $3000 to spare), then you can do some really nice analysis to get more out of the data.
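Pairing the sensor with an outcome measure mostly comes down to matching each swing to the ball-flight reading recorded closest in time. A minimal sketch, assuming both devices' exports have already been converted to timestamps in seconds on a shared clock; the record fields, the 2-second tolerance and the function name are hypothetical and not part of the Blast, TrackMan or Rapsodo software.

```python
from bisect import bisect_left


def pair_swings_with_outcomes(swings, outcomes, tolerance_s=2.0):
    """Match each swing to the outcome recorded nearest in time.

    swings, outcomes: lists of dicts with a 't' key (seconds, shared clock).
    Swings with no outcome within `tolerance_s` (e.g. misses) are skipped.
    Note an outcome can be matched to more than one swing; dedupe if needed.
    """
    outcomes = sorted(outcomes, key=lambda o: o["t"])
    times = [o["t"] for o in outcomes]
    pairs = []
    for swing in swings:
        i = bisect_left(times, swing["t"])
        # Candidates: the outcomes recorded just before and just after the swing
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(times[j] - swing["t"]))
        if abs(times[best] - swing["t"]) <= tolerance_s:
            pairs.append((swing, outcomes[best]))
    return pairs


swings = [{"t": 10.0, "bat_speed": 70}, {"t": 40.2, "bat_speed": 66},
          {"t": 90.0, "bat_speed": 72}]
outcomes = [{"t": 10.8, "exit_velo": 95}, {"t": 41.0, "exit_velo": 80}]
pairs = pair_swings_with_outcomes(swings, outcomes)
print(len(pairs))  # the swing at 90 s has no nearby outcome and is dropped
```

Once every swing carries its outcome, the question "what is a good number for this athlete?" becomes a comparison between groups of swings rather than a guess against a generic ideal.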

Our approach has been to use a cluster analysis (a form of analysis that groups repetitions into clusters of similar repetitions) to identify the metrics that occur with good and bad contact, so you end up with an individualised performance model for each athlete. We've also run the cluster analysis on the Blast data alone, rather than on the outcome data, which lets us identify the swings with high and low values in different metrics.
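The post doesn't say which clustering method was used, so as one common choice, here is a minimal k-means sketch in plain Python. Metrics are standardised first so that, say, bat speed in mph and attack angle in degrees carry equal weight; the metric columns and values in the example are made up.

```python
import math
import random
from statistics import mean, pstdev


def standardise(rows):
    """Z-score each column so metrics on different scales weigh equally."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # avoid dividing by zero for constant columns
    return [[(x - m) / s for x, m, s in zip(row, mus, sds)] for row in rows]


def kmeans(rows, k, max_iter=100, seed=0):
    """Plain k-means (Lloyd's algorithm); returns one cluster label per row."""
    rng = random.Random(seed)
    centres = [list(r) for r in rng.sample(rows, k)]  # start from k randomly chosen repetitions
    labels = None
    for _ in range(max_iter):
        # Assignment step: each repetition joins its nearest centre
        new_labels = [min(range(k), key=lambda c: math.dist(row, centres[c]))
                      for row in rows]
        if new_labels == labels:
            break  # converged: no repetition changed cluster
        labels = new_labels
        # Update step: move each centre to the mean of its members
        for c in range(k):
            members = [row for row, lab in zip(rows, labels) if lab == c]
            if members:
                centres[c] = [mean(col) for col in zip(*members)]
    return labels


# Made-up swings: [bat speed (mph), attack angle (deg)]
swings = [[70, 10], [71, 11], [69, 9], [60, 2], [61, 1], [59, 3]]
labels = kmeans(standardise(swings), k=2)
print(labels)
```

The cluster labels on their own say nothing about quality; the useful step is cross-tabulating them against good and bad contact, or comparing each cluster's average metrics.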

There is very rarely such a thing as a representative trial, one where the video from one repetition can be carbon copied to all similar outcomes. There will be elements of the action which vary from one trial to another that are important, and others that are irrelevant. There will also be actions that are important for one individual and irrelevant for another. Without context, analysis is fraught with danger (and there is massive potential for personal bias to colour the analysis). You should be trying to find ways to cut through any bias that the coach or athlete has when they are analysing the action, allowing interplay between the information available to help make good decisions and provide good feedback.

For the baseball system described here, we've also added a K-Vest to give us some kinematic metrics. This turns it into a system that can give a complete picture of what has happened, which can then be fed into an advanced statistical analysis (in our case the cluster analysis) to identify which movements are causes and which are effects, as well as which movements are important and which are irrelevant. Now you can adjust the feedback to suit the athlete, facilitating the feedback and growing the athlete's understanding.

I believe this is how the future of this kind of analysis is going to look. The elements are:

1. Video

2. Movement data (i.e. kinematic data from the K-Vest)

3. Smart sensor data

4. Outcome data (from systems like TrackMan)

5. Advanced statistical analysis (in our case the cluster analysis) to identify key elements of the action that lead to the good or bad outcome

6. Something and someone to bring everything together for feedback, making all the elements work as a team to give good information that leads to good decisions.
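One way to make the first five elements work together is to keep every stream for a repetition in a single record, so nothing reaches the analysis stage half-matched. A minimal sketch; the class, field names and metric keys are hypothetical, and the sixth element, the person pulling it all together, doesn't fit in a dataclass.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Repetition:
    """One swing or throw with every data stream attached (hypothetical schema)."""
    rep_id: int
    video_path: Optional[str] = None                             # 1. video
    kinematics: Dict[str, float] = field(default_factory=dict)  # 2. movement data, e.g. a K-Vest
    sensor: Dict[str, float] = field(default_factory=dict)      # 3. smart sensor data, e.g. Blast
    outcome: Dict[str, float] = field(default_factory=dict)     # 4. outcome data, e.g. TrackMan
    cluster: Optional[int] = None                                # 5. label from the statistical analysis

    def is_complete(self) -> bool:
        """True only when video, movement, sensor and outcome data are all present."""
        return bool(self.video_path and self.kinematics and self.sensor and self.outcome)

    def features(self) -> Dict[str, float]:
        """Merge movement and sensor metrics into one prefixed feature set for the analysis."""
        merged = {f"kin_{k}": v for k, v in self.kinematics.items()}
        merged.update({f"sensor_{k}": v for k, v in self.sensor.items()})
        return merged


rep = Repetition(1, "swing_001.mp4", {"pelvis_rotation": 45.0},
                 {"bat_speed": 70.0}, {"exit_velocity": 95.0})
print(rep.is_complete(), rep.features())
```

Keeping the outcome separate from the features matters: the outcome is what the analysis explains, the features are what it explains it with.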

So in a modern coaching world, where capture is easier and analysis needs to step up to the next level, you need expert support staff and a platform where all the disparate elements (both in human and data terms) can be brought together to work as a team to provide the right answers.

I once had a chance meeting with Aussie racing driver Mark Webber - one of the nicest, most generous and fiercely proud Australians there is. In that brief chat I had the chance to pitch a scenario where a group I was consulting to at the time could get some help from his current team, Red Bull Racing. To his great credit, given he probably gets hundreds of these proposals each year, he listened and suggested I follow up through a mutual friend. In the end we were able to make something happen - not a grand partnership but something really positive that definitely shaped the organisation I was consulting to over the next few years.

For me, this was a brilliant example that applies to a lot of situations in the elite environment. Time was limited, the concept was a little abstract, but the message needed to be delivered then and there. The proposal needed to be broad but have a very simple and targeted result - making the organisation I worked with and he cared about better, helping them win. The detail about how and when could come later.

The lessons learnt from this experience can maybe be used to solve another issue I've been thinking about recently. I put a call out over social media last week to get an idea of how many biomechanists there are working in elite sport who aren't also employed at an institute or university. The answer is… not many - me and only a couple of others.

I think this is a problem. The world of an academic sport scientist is very different from the world of an applied one, and there are precious few people I know who can effortlessly switch between the two modes of operation. Where an institute based biomechanist is used, there are often issues with continuity of service, with testing tending towards a 'one and done' approach that often leaves a club disappointed that a complex problem can't be fixed with a wave of a magic wand (albeit a magic calibration wand).

There are many barriers to getting more biomechanists into elite sporting organisations. Some are real issues, but others are just the result of misguided perceptions. So, why should an elite sporting organisation engage a biomechanist? Drawing on the lessons learnt from past experiences, here are my reasons.

A biomechanist in your organisation will help you win because everyone in your organisation will be better at what they do.

You will have better coaches, because they will be able to use data and advanced analysis to augment their own intuition - they won't have to guess where the root cause of a technique fault lies.

You will have better analysts, because combining video analysis with big data is what biomechanists are trained to do - they will be able to find new insights into technical and tactical performance.

You will have better scientists, because wearable sensors and other monitoring tools like force plates are our bread and butter - they will get more out of the technology you already have.

You will have better medical and rehabilitation staff, because analysing movement is what we do best.

Finally, it's not expensive - technology is cheap and when the field, the pitch or the court is your lab then any analysis is possible.

The beginning of the 21st century has seen a surge in the use of data in elite sport. From a greater reliance on performance based statistics to guide front office and tactical coaching decisions, to the integration of wearable sensors that enhance the physical preparation of athletes, the role of data in shaping the day-to-day activities of an elite sporting organisation has dramatically expanded. However, one area that has lagged somewhat behind is the use of data to enhance an athlete's technique.

There are many reasons for the slow integration of data-driven analysis into coaching practice. Even though the technology used to quantify technique has been available for many years, the nature of the analysis requires a complicated set-up that is often perceived to be only available in a laboratory setting. There is also resistance from coaches and athletes who have traditionally provided a feel-based analysis, valuing qualitative assessment over a quantitative analysis.

These are good reasons for not rushing into using data to drive technique analysis. It is true that the set-ups are complicated, though they don't necessarily need to be. It is also true that a feel-based qualitative assessment often offers more than a data driven quantitative assessment, though there is great scope in combining the two, enhancing the coach's eye through a data driven analysis.

When you look at the technology that is both succeeding and creating excitement in coaching circles, it's generally simple technology that lets coaches interpret the results and integrate that information into their qualitative analysis. It provides some decent quantitative information about the quality of a technique, while still leaving the coach to interpret the results and guide the athlete towards a better result through their own coaching 'feel'.

Though a brilliant step in the evolution of coaching, these methods and sensors can still be greatly improved upon without compromising the elements that make them work. Firstly, the data is a fair way from being comprehensive, generally providing information on only one element of a technique, and there is no guarantee that an improvement in the results measured by the sensor will lead to improved performance. Secondly, the reliance on one-off results (i.e. looking at each effort individually rather than in groups of 'good' and 'bad' attempts) can add to the confusion rather than reduce it. This is compounded by the weak connection between improvements in the data and real performance improvements.

Three steps can be taken to improve these systems: add more lightweight sensors to increase the amount of information on a technique without impacting the practicality of the overall system; incorporate real performance measures (information on how successful each attempt was); and use machine learning to drive the analysis, thereby reducing the complexity of the results.

A good way to integrate more sensors is to find an off-the-shelf system and adapt it to the specific situation (as described in a previous post). Often there is a ready-made answer that just requires some know-how to make it effective.

Finding performance measures is also not a difficult task. They can range from a simple good vs bad assessment by a coach to a more thorough analysis of results (like with TrackMan or a similar radar system for throwing and hitting sports).
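Either kind of performance measure ends up as a label on each attempt. A minimal sketch of both; the exit velocity and launch angle thresholds and the 1-5 rating scale are placeholder assumptions that would, in practice, be agreed with the coach for each athlete.

```python
def label_from_radar(exit_velocity_mph, launch_angle_deg,
                     min_velocity=90.0, angle_range=(10.0, 30.0)):
    """'good' if the ball was hit hard enough within the target launch-angle band.

    Thresholds are placeholders, not recommended values.
    """
    low, high = angle_range
    if exit_velocity_mph >= min_velocity and low <= launch_angle_deg <= high:
        return "good"
    return "bad"


def label_from_coach(rating, cutoff=4):
    """Simpler alternative: a subjective coach rating on a 1-5 scale."""
    return "good" if rating >= cutoff else "bad"


print(label_from_radar(95.0, 20.0), label_from_radar(95.0, 40.0), label_from_coach(3))
```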

Finally, machine learning techniques can be used to identify elements of the data that are different between good and bad outcomes. A cluster analysis on the performance data can group similar attempts. When the group average results are generated, the elements of the technique that are clearly different between the good and bad groups can be easily identified, allowing the coach to clearly see what the underlying difference is between good and bad technique for that individual athlete.
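Once each attempt has a group label (from a cluster analysis, a coach's rating or a radar-based outcome), comparing the group averages can be sketched as follows. Scaling each difference by the pooled standard deviation (Cohen's d) is my choice of measure, used so that metrics in different units can be ranked against each other; the metric names and values are made up.

```python
import math
from statistics import mean, stdev


def rank_differences(trials, labels, good="good", bad="bad"):
    """Rank metrics by how strongly they separate good from bad attempts.

    trials: one dict of metric -> value per attempt
    labels: matching group label per attempt (needs 2+ attempts in each group)
    Returns (metric, good mean, bad mean, standardised difference), largest first.
    """
    ranked = []
    for metric in trials[0]:
        g = [t[metric] for t, lab in zip(trials, labels) if lab == good]
        b = [t[metric] for t, lab in zip(trials, labels) if lab == bad]
        # Pooled standard deviation across the two groups
        pooled = (((len(g) - 1) * stdev(g) ** 2 + (len(b) - 1) * stdev(b) ** 2)
                  / (len(g) + len(b) - 2)) ** 0.5
        diff = mean(g) - mean(b)
        if pooled > 0:
            d = diff / pooled
        else:
            # No spread in either group: any difference is perfectly consistent
            d = math.copysign(math.inf, diff) if diff else 0.0
        ranked.append((metric, mean(g), mean(b), d))
    return sorted(ranked, key=lambda r: abs(r[3]), reverse=True)


trials = [
    {"bat_speed": 72, "attack_angle": 10}, {"bat_speed": 70, "attack_angle": 12},
    {"bat_speed": 71, "attack_angle": 8}, {"bat_speed": 71, "attack_angle": 0},
    {"bat_speed": 70, "attack_angle": -2}, {"bat_speed": 72, "attack_angle": 2},
]
labels = ["good"] * 3 + ["bad"] * 3
for row in rank_differences(trials, labels):
    print(row)
```

In this made-up example bat speed is identical across the two groups, so the metric that rises to the top is attack angle, which is exactly the kind of pointer the coach can then work from.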

At the end of this process, it's time for the coach to take charge and use their years of knowledge and experience to guide the athlete in fixing the fault identified. In this way, big data has given the coach an insight into what the underlying technique fault is, but has left them to provide the solution to fix the problem. Machine learning has provided a guide for the coach without discounting their own feel and qualitative assessment in finding a solution to make an athlete better.

Wearable and miniaturised technology is providing some fantastic advances in the way data is used in elite sport. A whole range of new metrics are providing scientists and coaches with valuable information on how to minimise injury risk and maximise performance. But there are still definite gains to be made from adapting existing technology to new situations.

I have three projects on the go at the moment that extend the use of existing technology. The main one is the stride variability toolkit for GPS units, which is nearing release, but another one I'm really excited about is this system, which provides baseball coaches and athletes with really detailed information on their swing (and hopefully pitching) mechanics.

Exciting new project - adapting a motion analysis system for baseball, using machine learning to analyse data. Bringing the lab to the field pic.twitter.com/DGsSpmoBdW

(Alec Buttfield, @alecbuttfield, January 13, 2017)

This isn't a system that was developed for baseball; it's actually a golf system, and that has massive benefits for sports like baseball. The main benefit of using a system designed for golf coaches is that the information is rich and detailed, but the technology itself is really practical and easy to use.

There is a lot of new technology coming onto the market that analyses baseball mechanics, like the peak motus load monitoring tool for pitchers and the Blast sensor for hitting. But these technologies don't offer the depth of information provided by 3D motion analysis data. If coaches and athletes really want an in-depth analysis of their mechanics, then a system like the one we've developed is the way to go. The data is more complex (though the analysis is made simpler by the machine learning tools we've integrated, similar to this project), but baseball isn't a sport that shies away from complex stats and data to get a competitive advantage.

In this and many other cases, it is the intelligent use of existing technology that has provided a rich source of new information. The competitive advantage that can be gained from this approach is magnified by the fact that new technology is generally available to everyone, whereas adapting existing technology is dependent on the knowledge and skills of the people within (or consulting to) an organisation, allowing benefits and advantages to be kept in-house.

In essence, new technology provides an advantage that is available to all, diluting the advantage for any individual organisation, whereas adapting existing technology provides an advantage to the few organisations that have invested in the knowledge and experience required to adapt it.

If you're interested in the systems described here, drop us a line.

With the Australian summer of cricket comes the inevitable discussions on injuries in the Australian squad, along with the finger pointing and apportioning of blame, generally directed towards what is felt to be an unnecessary intrusion by sport scientists.

In this post-truth world where experts are often derided, their status relegated to that of an "expert" or, even worse, a "so-called expert", is there still room for reasoned debate on this and similar issues in other sports (such as athletes 'resting' in the NBA, or rotating players in an English Premier League squad)? Or is this jocks vs nerds battle eternally doomed to partisan bickering?

Taking a step back, isn't it possible that both groups of experts are right? The jocks who have a profound understanding of the physical requirements of being an elite athlete as it relates to their own personal experiences, and the nerds who have an acute understanding of the data and current research into how best to prepare athletes for the rigours of competition, could both groups be right at the same time?

From the nerds' side of the argument, there's a lot of really good data backing up their approach. A lot of research has been done into fast bowling workload patterns as a risk factor for injury (link #1), and it shows that spikes in workload are associated with increased injury risk (link #2), (link #3). Research is continuing into, among other areas, better ways to measure (link #4) and prescribe workload (link #5), as well as continual refinement of risk factors for injury (link #6).
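As an illustration of what a 'spike' means in practice, one widely used measure is the acute:chronic workload ratio, which compares the most recent week's load with the average over the last four weeks. Whether the linked studies use exactly this definition is an assumption on my part; the sketch below uses the simple rolling-average ('coupled') version, with daily deliveries bowled as a hypothetical load measure.

```python
def acute_chronic_ratio(daily_loads, acute_days=7, chronic_days=28):
    """Acute:chronic workload ratio for each day, oldest day first.

    Acute load   = mean daily load over the last `acute_days` days.
    Chronic load = mean daily load over the last `chronic_days` days
                   (the acute window is included, i.e. the 'coupled' version).
    Days without a full chronic window, or with zero chronic load, get None.
    """
    ratios = []
    for i in range(len(daily_loads)):
        if i + 1 < chronic_days:
            ratios.append(None)
            continue
        acute = sum(daily_loads[i + 1 - acute_days:i + 1]) / acute_days
        chronic = sum(daily_loads[i + 1 - chronic_days:i + 1]) / chronic_days
        ratios.append(acute / chronic if chronic else None)
    return ratios


# Hypothetical bowler: three weeks at 20 deliveries a day, then a week at 40
loads = [20] * 21 + [40] * 7
print(acute_chronic_ratio(loads)[-1])  # acute 40 vs chronic 25
```

A value around 1 means the bowler is doing roughly what they are used to; values well above 1 flag a week that is heavier than their recent history, which is the kind of spike the research links to injury risk.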

From the jocks' side, there are also valid arguments. It might seem unscientific to say 'back in my day', but that's still a case study of an individual who competed at the highest level without any restrictions placed on their training or game activity, and who possibly performed better when they did more work rather than less. These are experts in how they maximised both their performance and their health, seemingly without any 'unwelcome' intrusions.

We might need an even broader view of the situation to understand it better. The nerds know that in a study of junior fast bowlers around 14 years of age, 25% sustained an overuse type injury, with the amount of rest between bowling days being a significant risk factor (link #7). Setting workload limits for adolescent bowlers at or around the levels identified in this study would do nothing to reduce injury risk for the other 75% of the group, and could possibly be detrimental to their performance. Even so, it would be morally and ethically unconscionable for a national body to allow activities that it knows will expose up to a quarter of its junior athletes to serious injury.

The thing is, the jocks are among the athletes who got through their junior years without a career ending injury, and for them workload restrictions may well have been counter-productive to their resilience as their careers progressed. So their expert opinion is absolutely correct when they say that, in their personal experience, restrictions are to blame for a lack of resilience in the current crop. However, we cannot expect the national body to have vague best practice guidelines for juniors when there is such a clear risk of serious injury.

From the outside, there is still so much that's not known about this problem. It is entirely possible that, by ensuring juniors whose bodies are unsuited to the rigours of fast bowling have the best chance of getting through their early careers without injury, they may simply have been set up for failure later in life. If this is the case, then athletes with underlying flaws that will eventually restrict their capabilities at the elite level may be filling the limited positions in junior high performance pathway programs, squeezing the more robust athletes out of the system (particularly given the explosiveness of the fast bowling action).

But the answers to this and other similar questions come through more knowledge, more understanding, more science, not less. More co-operation, understanding and crucially leadership from the head jocks, nerds and (previously unmentioned) pointyheads is absolutely necessary to get the best results.

In an age of rapid technological advances in and around sport science, especially wearable technologies, it's difficult not to be seduced by everything new and shiny. However, a competitive advantage can be gained through a more thorough analysis of existing information. What's more, this advantage will not be available to the next club that buys the technology. It would require many organisations to adjust their priorities and deliberately invest in knowledge and creative thought.

As an example of re-examining existing stats, I wrote a post last year about what seemed an interesting trend in the variability of the pitcher vs batter contest in Major League Baseball. It looked like there was a fairly consistent shift to favour the batter around the time of the all-star break. This was particularly interesting as the advantage seemed to begin before the break and continue after the regular season had re-commenced.

The trend wasn't evident in the 2015 numbers, so it will be interesting to see if it re-appears this year. However, the same process can be used to look at just the offensive performance and its variability over the season. In short, you can work out a stat like OPS (on-base average plus slugging percentage) over small periods of time during the season (say, 15 days), then look at how varied the results are over the course of the season.
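To make the windowed OPS idea a bit more concrete, here's a minimal Python sketch. It assumes you've got one batting line per game for a player (the `GameLine` fields are my own hypothetical layout, not any particular data provider's), uses the standard on-base and slugging formulas, splits the season into consecutive, non-overlapping 15-day windows counted from the first game, and uses the population standard deviation of the windowed OPS values as the measure of within-season variability. Overlapping rolling windows or a different spread measure would be just as reasonable choices.

```python
from dataclasses import dataclass
from datetime import date
from statistics import pstdev


@dataclass
class GameLine:
    """One player's batting line for one game (hypothetical layout)."""
    day: date
    ab: int       # at-bats
    h: int        # hits of any kind
    doubles: int
    triples: int
    hr: int
    bb: int       # walks
    hbp: int      # hit by pitch
    sf: int       # sacrifice flies


def ops(lines):
    """OPS = on-base percentage + slugging percentage over a set of games."""
    ab = sum(g.ab for g in lines)
    h = sum(g.h for g in lines)
    bb = sum(g.bb for g in lines)
    hbp = sum(g.hbp for g in lines)
    sf = sum(g.sf for g in lines)
    doubles = sum(g.doubles for g in lines)
    triples = sum(g.triples for g in lines)
    hr = sum(g.hr for g in lines)
    singles = h - doubles - triples - hr
    total_bases = singles + 2 * doubles + 3 * triples + 4 * hr
    obp_denominator = ab + bb + hbp + sf
    obp = (h + bb + hbp) / obp_denominator if obp_denominator else 0.0
    slg = total_bases / ab if ab else 0.0
    return obp + slg


def windowed_ops(lines, window_days=15):
    """OPS for consecutive, non-overlapping windows of window_days days,
    counted from the first game; windows with no games are skipped."""
    lines = sorted(lines, key=lambda g: g.day)
    start = lines[0].day
    windows = {}
    for g in lines:
        windows.setdefault((g.day - start).days // window_days, []).append(g)
    return [ops(windows[k]) for k in sorted(windows)]


def season_summary(lines, window_days=15):
    """Season-long OPS and the spread (population SD) of the windowed OPS values."""
    return ops(lines), pstdev(windowed_ops(lines, window_days))
```

Aggregating the counting stats within each window (rather than averaging per-game OPS) keeps games with more plate appearances properly weighted.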

What's the point? Well, it might offer some really valuable information on what drives an athlete's peak performance. Are they an athlete who has a better season when they have more streaks? This would be characterised by better season-long performance with higher within-season variability. Or are they an athlete who has better seasons when they are more consistent? The first sort of athlete could be described as one who has a baseline performance and periods of supra-maximal performance during the year (ie. streaks), and the second as an athlete who has periods of performance below their baseline level (ie. slumps).

When you look at players over multiple years, it might be that the 'streakers' have their best years with high variability, whereas the 'slumpers' have their best years with low variability.

Again, so what? Well, perhaps this information can be used to nudge athletes closer to their preferred state: an athlete who performs better with high variability should be given that variability in their training stimulus, whereas an athlete who does better with lower variability might be better off sticking to set routines.

These data might also be useful in identifying 'career years' and helping to predict whether the previous year was just an anomaly or a true reflection of what could be a sustainable shift in performance. Perhaps if expanded to a squad you could make better judgements about where to invest and bolster the squad. For example, if the team had a good year at the plate but it was suggested that it wasn't a sustainable improvement then it probably wouldn't be prudent to invest heavily in pitching in the expectation that the offensive production would be repeated in the next year.

Now, I've not spent too much time on this, so these are just some superficial analyses and a bit of speculation. But what it all comes down to is creatively using existing statistics and analyses, and placing performances in the context where they occurred, to find new information. I believe that an organisation that can re-evaluate its investment to prioritise knowledge and intelligence over new and shiny technology will very quickly develop a competitive edge over its rivals.

The Olympics are a hard business. To win any colour of medal you have to be right at the top of your game. You don't need to be lucky to win, but it's very rare that you can overcome any bad luck, so fine are the margins.

What's become clear in the last few Olympics is that the best strategy for coming out on top is not only to find a way to get athletes competing at their best, but also to make sure you give them the best opportunity to compete at multiple Olympic Games.

So are we simply relying on talented athletes to come along to find success? Yes and no.

You clearly need talented athletes, and this has been the case forever. However, any competitive advantage that any country has in the preparation of athletes in any sport (in particular in the sport science support) is now very small at best. What this has created is a situation where world class sport science support now just levels the playing field with the other nations who are providing the same quality of support. What a few years ago might have provided a significant edge now just gets your athletes to the start line with an even chance of success.

This does not mean there are no potential competitive advantages left on the table. What I see now is that physiology, S&C and performance analysis are generally being done at a truly elite level across the world. Skill acquisition and biomechanics are being done well but not consistently, so there are some potential gains there (in particular in the integration of these disciplines into coaching practice). The biggest advantages lie in co-ordinating all service providers and coaches into a team that works as a well-oiled machine, with personalities and egos gelling seamlessly.

I personally would like to see more lead scientists drawn from disciplines other than physiology or S&C, to perhaps shift the training focus away from minute aspects of physical preparation towards a more holistic preparation with a greater focus on performance under the pressure of competition. An added advantage of having a lead from another discipline is that physiology and S&C will pretty much always be accessed by a coach anyway, whereas a lead from a different discipline will encourage that discipline's concepts and ideas to be integrated into the preparation.

But after all the planning, effort and preparation, this is the Olympics. A win means you are truly the best competitor, and after a loss there's nothing more to say than: congratulations, there's nothing more I could have done, you're better than me.

I'm just putting the finishing touches on my PhD; it's been a big couple of months of writing. We've found some nice associations between injury and the variability of within-step accelerometer data (taken from real gameplay captured with Catapult GPS units). There are also nice associations suggesting these data have a good deal of power to predict how fresh an athlete is. There are two approaches to using this information, and for that matter all athlete tracking and 'wellness' metrics. You can use it to understand the risks and potential consequences of exposing an athlete to a physical load, or you can use it to justify risk minimisation and reducing workload. They are two sides of the same coin, but very different mentalities.
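The metrics from the thesis aren't described here, so this is not them, but as a rough illustration of what step-to-step variability in accelerometer data can mean, here's a hypothetical Python sketch. It assumes a single acceleration trace in g at a fixed sample rate, finds step peaks with a naive local-maximum rule (the 2 g height and 0.2 s minimum gap are illustrative guesses), and reports the coefficient of variation of peak magnitude and step time. Real unit data would need filtering and a far more robust step detector.

```python
from statistics import mean, stdev


def detect_step_peaks(acc, fs, min_height=2.0, min_gap_s=0.2):
    """Indices of local maxima in an acceleration trace (in g) that exceed
    min_height and sit at least min_gap_s seconds after the previous kept peak.
    Both thresholds are illustrative guesses, not validated values."""
    min_gap = int(min_gap_s * fs)
    peaks = []
    for i in range(1, len(acc) - 1):
        is_local_max = acc[i] > acc[i - 1] and acc[i] >= acc[i + 1]
        if acc[i] >= min_height and is_local_max:
            # keep the first peak in any cluster closer together than min_gap
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks


def step_variability(acc, fs):
    """Coefficient of variation (SD / mean) of step peak magnitudes and step times,
    or None if too few steps were found."""
    peaks = detect_step_peaks(acc, fs)
    if len(peaks) < 3:
        return None
    magnitudes = [acc[i] for i in peaks]
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return {
        "peak_cv": stdev(magnitudes) / mean(magnitudes),
        "step_time_cv": stdev(intervals) / mean(intervals),
    }
```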

I heard a commentator during an NBA game (I think it was Jeff Van Gundy) describe the prevailing attitude within clubs at the moment as a 'culture of rest'. Rio Ferdinand last week described it as a culture of over-protection. Are these just the growing pains of sport science being integrated into sports that haven't really been exposed to it before, or are they legitimate concerns? Probably a bit of both, but my experience of working in both mature and emerging sport science environments is that there are a lot of examples where data is misused, if not abused, in the name of minimising risk.

What I've also learnt is that I wouldn't begrudge anyone for going down the risk minimisation path. When your job is on the line and one of your KPIs is keeping players uninjured, perhaps it's better to describe the decision on how to interpret data not as two sides of the same coin but as two sides of different coins. Are applied sport scientists paid to maximise performance or to minimise injury? Of course they're paid for both, but one is much easier to measure than the other. You can clearly demonstrate a return on investment in keeping players on the pitch, but it's difficult to say how much you've maximised performance and repaid the investment the club has placed in you, as you've got nothing to compare it to.

In the end, elite sport is about being brave. Normally you have to risk it to win it. What I hope is that the metrics I've created for my PhD are used to help practitioners back themselves, be brave, and tip the balance towards maximising performance.

keywords; Technology

Aaron Tilley from Forbes wrote an article recently about the new frontier of player tracking in the NFL (How RFID Chips Are Changing The NFL). Zebra Technologies is behind it all, and their VP & GM of Location Services, Jill Stelfox, was quoted in the article saying:

There's no doubt we're in the early stages of how this technology is used. But if this is only the first quarter, what does the second, third and fourth quarter look like? What will we be able to do with all this new information? I'll get my crystal ball out and have a go at some predictions.

In the second quarter the goal will be to take the metrics we are already using and transition them to the new measurement tool, as well as integrating those game day metrics into the overall load monitoring system. There'll certainly be holes in the athlete load data that can be filled with these new measurement systems, but the trick will be how well all the systems are brought together to give a complete picture (and whether the new metrics are used to top up the old metrics, or the other way around). How successfully this is done will be determined by the skill of the people involved at each club, as it's often not as simple as it sounds, especially given the differences between systems: do the metrics combine cleanly, or does an 'x' in one system equal a 'y' in another?

There will also be the development of new metrics in the second quarter. Some analyses will suddenly be valid due to the increased resolution. For instance, with more precise position data, you may be able to accurately measure small accelerations. This opens up massive possibilities. Instead of relying on velocity and broad accelerations (ie. low precision accelerations that can only be broken down into a few bands like medium and high accelerations, generally with fixed thresholds), we can start to look at fine accelerations and perhaps break the accelerations down into more precise bands (ie. get more information on the actual intensity of the acceleration) and use rolling thresholds so that an acceleration at a starting speed of 5m/s isn't treated in the same way as an acceleration at 1m/s.

This is something I tried to do about a decade ago in analysis packages I was providing to professional teams who were early adopters of GPS, but the resolution of the accelerations wasn't good enough. The rolling threshold did work if you were just looking for a 'medium' and 'high' acceleration, but that was just confusing to the users who were trying to match up the values with the metrics provided by the manufacturers of the hardware, so it was largely mothballed till a more appropriate time (which looks like it could be now). Keep an eye out for the energy expenditure research that's published in the next little while and consider how a high precision acceleration can enhance the metrics described.
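Here's a rough Python sketch of the rolling threshold idea. It assumes x/y positions in metres at a fixed sample rate, differentiates them with simple finite differences (real position data would need filtering first, which I've left out), and uses a made-up linear rule where the 'high' acceleration threshold shrinks as starting speed rises. The 3 m/s² base, the 9 m/s ceiling and the 0.5 m/s² floor are illustrative numbers only, not validated values.

```python
import math


def speeds_and_accels(xs, ys, fs):
    """Finite-difference speed (m/s) and acceleration (m/s^2) from x/y
    positions (m) sampled at fs Hz. accels[i] is the change from speeds[i]."""
    dt = 1.0 / fs
    speeds = [math.hypot(x1 - x0, y1 - y0) / dt
              for x0, y0, x1, y1 in zip(xs, ys, xs[1:], ys[1:])]
    accels = [(v1 - v0) / dt for v0, v1 in zip(speeds, speeds[1:])]
    return speeds, accels


def rolling_threshold(start_speed, base=3.0, v_max=9.0, floor=0.5):
    """Hypothetical speed-dependent 'high' threshold: the faster the athlete
    is already moving, the smaller the acceleration needed to count as high."""
    return max(floor, base * (1.0 - start_speed / v_max))


def classify(speeds, accels):
    """Label each acceleration 'high' or 'low' relative to its starting speed."""
    return ["high" if a >= rolling_threshold(v0) else "low"
            for v0, a in zip(speeds, accels)]
```

With this rule, the same 2 m/s² effort counts as high from a starting speed of 4.5 m/s but low from 1 m/s, which is the kind of distinction a fixed threshold can't make.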

The development of new metrics will clearly include tactical and gameplay analysis. I think this will be slower to develop, as it's likely to be done on a case by case basis until a coach finds something that really resonates with them. For instance, if there's a question about what makes player x successful, then you could perhaps look at separation distances from an opponent, or separation from teammates in defensive and offensive formations, or speed reached during a play and the chance of success (which could also marry in with the physical conditioning staff to determine when a player might have reached their effectiveness threshold). It will likely be quite difficult to latch onto a value that can be applied across the board, so there's probably going to be quite a bit of trial and error before something works.
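As an example of the sort of building block these tactical metrics could start from, here's a minimal Python sketch of nearest-opponent separation per frame. It assumes frame-synchronised x/y positions in metres for the player of interest and for each opponent; the function names are hypothetical, and what you then do with the series (minimum separation at the catch, time spent above some distance, and so on) is exactly the trial and error part.

```python
import math


def nearest_separation(player_xy, opponents_xy):
    """Distance (m) from one player to the closest opponent in a single frame."""
    px, py = player_xy
    return min(math.hypot(ox - px, oy - py) for ox, oy in opponents_xy)


def separation_series(player_track, opponent_frames):
    """Nearest-opponent separation for every frame.

    player_track: list of (x, y) for the player, one per frame.
    opponent_frames: list of frames, each a list of (x, y) opponent positions.
    """
    return [nearest_separation(p, opps)
            for p, opps in zip(player_track, opponent_frames)]
```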

In the short term, integrating the position data with vision will give everyone some technical eye candy to get excited about. This sort of feedback could influence decisions by nudging thoughts in a particular direction, but also has the potential to be a distraction. The feedback tools developed need to be handled carefully to ensure that the focus is still on performance outcomes and not on the technology.

If the second quarter is about starting to get creative with the possibilities opened up by the new technology, the third quarter will be about taking the focus back to performance. The metrics that were consolidated in the previous stage will become routine, and the new variables that have been developed will be tested out and eventually become part of the day to day analysis of performance. Metrics that were promising but didn't provide a performance return for the time invested will be shelved, and other metrics that make a real difference to the operation will be adopted.

Tactical and gameplay analyses that make a real difference will become clearer, and will likely be adopted by coaches and performance analysts. This will follow trends as staff move from one team to another and the supposedly secret internal practices become widely known. Ideas that provide a competitive advantage won't stay private for long, so teams need to make the most of them in the short term.

However, there are major advantages to be gained in the ideas transfer market. If an organisation is seen to be ahead of the game, the rest of the competition's perception that they are inferior in that area can carry over into athletic performance. If a perception of superiority in an area can be cultivated, it can have real performance outcomes (both enhanced performance for one team and diminished performance for the other). One way to do this would be to form an innovations group within the organisation that is secretive about its business (ie. its operation wouldn't be something a coach transferring from team to team would know much about) but at the same time keeps producing new and seemingly innovative applications.

By the fourth quarter, the technology is adopted across the board and transfer of staff means that everyone is pretty much doing the same thing. There is generally no competitive advantage in using it, but a real competitive disadvantage in not using it.

Perhaps one example of a technology currently in the fourth quarter is video analysis. Everyone does it, generally using the same analysis tools in the same way. There is probably scope to take the analysis tool back to the third quarter by introducing new elements, but there's great resistance, as it would be a time-consuming process and the performance outcome is at best unknown. If this can be outsourced to an innovations group within the organisation then it's more likely to lead to a positive outcome, but if it's left to the day to day operation it will remain an essential tool that only the best operators can use to give your team an advantage.

The life of this new technology definitely has a long way to run. New analyses that haven't been considered yet will become normal, and old analyses that have been ignored for various reasons will suddenly become crucial. Along the way there are ways of cultivating a competitive advantage over and above the performance outcomes you get from a straightforward analysis of the data. The real winners will be the ones who get out in front and seek out the good operators who can help generate the advantage for their team.

keywords; Performance Analysis, Technology

I think it was Chris White from the English Institute of Sport who started it, around the end of 2009 maybe. He was walking around the pits at a track cycling world cup with an iPhone and coaches were looking at a video of a race that had just happened. Of course, all the other coaches immediately wanted it as well, and as soon as possible after the race! So, that's how I began my second stint travelling to world cups, world champs, and eventually the 2012 Olympics with Cycling Australia, strangling every bit of performance I could out of the best technology a limited budget could buy!

The first stint was way back before the Athens Olympics, working with Scott Gardner to set up the performance analysis system that became the backbone of the race review and analysis system that's lasted for over a decade through massive technology changes (a testament in part to the quality of the original Dartfish software package and all the upgrades they've provided). There weren't many of us then, but if you're ever at a major track cycling event now just have a look at the row of performance analysts all frantically trying to get the crucial information that you can get from an immediate review of performance down to the coaches in the pits - it's come a long way.

But in some respects, nothing's changed. The news that the feedback system at NFL games frequently fails isn't particularly surprising given the technical challenges involved. It all appears so easy - video is recorded, video appears on tablet, happy days. But the reality is so different. Without a high quality Performance Analyst on board, the failures are going to be frequent and frustrating. Where the good operator comes into their own is not just the ability to stop the main system failing (it's likely to do that at precisely the wrong moment, just look at the AFC championship game) but how they've set up their contingencies (printouts of defensive formations anyone?).

I've got some great memories from both stints working with CA. From my first trip in 2003 to Aguascalientes in Mexico, where I was standing on a rickety and hastily constructed grandstand transmitting my laptop screen to the coach, Martin Barras, who was walking around the pits with virtual reality goggles on to view the previous race (I could have done it more simply, but it wouldn't have had the same effect), to the times when everyone's network went down at once, leaving a bunch of performance analysts scrambling and coaches complaining that only our network was down while everyone else seemed to be running fine (normally this would go in a circle, with Australia pointing at GB asking why GB was still working, GB pointing at France saying France was still working, and France pointing at Australia asking why Australia was still going). It really was a matter of wringing the neck of technology that wasn't meant to be used in these situations to get the systems working, but that's what working in elite sport in the field requires.

PS. I've mentioned Chris, but should also mention the great work done by Kat Phillips (New Zealand), Benjamin Maze (France), Albert Smit (Netherlands), Will Forbes (GB), and of course Tammie Ebert (Australia) and Emma Barton (Australia then, now GB). Sorry to anyone I've missed. Good times!

keywords; Analytics

In case you missed it, Paul DePodesta has been hired by the Cleveland Browns as their Chief Strategy Officer. If you need some background to who Paul DePodesta is and what a Chief Strategy Officer does at an NFL club, google it and you'll soon find out (it's worth doing).

The interesting bit about roaming the internet for commentary on this hire is the range of views being expressed. There seems to be more positive than negative opinions, but there also seems to be a lot of 'let's wait and see' (which I'm sure will turn into 'I told you so' as soon as it booms or busts).

What is also interesting is that a lot of commentators are waking up to the possibilities presented by the range of new metrics being introduced into the NFL, like the positional information provided by Zebra Technologies and the player load information provided by Catapult. There is a growing realisation that these metrics could add so much more to the analysis of an athlete's performance, and if they were combined... wow!

Here's the newsflash: the future is already here, and has been for a while.

I know that for years now I've been working in pro clubs with coaches and other sport science professionals to write and develop all sorts of analytics software packages that combine where a player is on the field with physiological metrics, other tactical metrics and vision. I'm sure I wouldn't be the only one.

There are a lot of crucial elements to making this work, but here are just two of them. Firstly, the info being provided by Catapult, Zebra and the like is the starting point, not the finishing point. Creatively using the raw information takes a really good knowledge of both what's being measured and what the question is that needs to be answered. Which leads on to the second point. Working with the coaching staff to frame the question is vital, otherwise you're just analysing numbers for your own interest without a real performance goal for the team.

Hiring Paul isn't a revolution, it's a natural progression. In my view, his success will be down to his ability to bring everyone in the organisation on board to make use of all the potential created by the availability of new metrics, as well as the ability of the people around him to create amazing insights from the raw materials available to them.

keywords; Elite Organisations

We had just finished an important competition, the coaching and support staff were having a meal and a quick debrief in a local restaurant. The coaches were asked what their best performance of the meet was (meaning athletic performance). One of the coaches didn't nominate an athletic performance but singled me out for the job I'd done as performance analyst as the performance of the meet.

This annoyed me.

The reason it annoyed me was that my performance at this meet was only seen as better than at all the others we had been to because the technical setup in the arena allowed me to get the information to the coach faster. The outcome he wanted was easier to achieve, so in effect my professional performance was probably not as good as at other competitions where I had to scramble the whole time, pushing the technology as hard as I could to get the outcomes the coaches wanted. By singling out this competition, the coach showed he didn't understand the process behind the result.

But it was nice to be recognised.

In our trade, outcome is king. We may talk about process all the time, but without results it won't be long till there's a door hitting your backside on the way out.

It's not an environment that lends itself to stability, but more often than not it's stability that drives success. So how do you make stability in the process more important when outcome is valued so much?

There are a number of ways this can be done, but in my view the most effective is to have someone in the organisation who straddles coaching and support services, a voice in both camps who helps each area understand the process the other is undertaking. 99% of the time in sport, if you're not in the room then your opinion isn't even considered, so having someone in the coaching room who is fully up to date with, and understands, the processes of the support staff (and speaks their language, literally and metaphorically) can provide a massive advantage.

Why isn't this the role of the head coach? He probably doesn't have the knowledge of sport science to completely understand what the service providers are doing (as long as they provide the outcomes he wants, he doesn't care).

Why isn't this the role of the support services lead, or head of sport science (or high performance manager, or whatever that role is called in your organisation)? They really need to be ensuring their own areas are functioning as well as they can, and if disagreements arise around the direction of the organisation they need to act as advocates for their own areas.

Why isn't this the role of the department manager? That role is often around higher level direction and personnel management rather than the technical aspects of the department.

I think there is a definite need for a voice that can help broker a compromise between coaching and support services (as long as the overall direction as dictated by the head coach isn't compromised), and bring the focus back to process rather than simple outcomes.

Outcome will still be king, but a voice that crosses between coaching and support services can help drive stability in the process through understanding.

keywords; sport science - analytics - technology

Elite sport has always been prone to jumping on the bandwagon of a new technology, which is not surprising given that if you're not moving forward then your opposition is passing you. I crunched some numbers for a sport I was working with after one Olympic Games, and on the trend from previous 4 year cycles alone, our physical performances needed to improve by 2.5% just to keep up. More importantly, even though we were very competitive at the time, we would need to improve by 5 to 7% if we wanted to win. Some improvement would come from the natural progression of the athletes in the program, but there is also the boost that improvements in technology can provide.

However, it's not always the shiny and new that you need to focus on.

A lot of what we do has gradually been refined over many years. Take video analysis for tactical and gameplay purposes. Sportscode and other video editing tools are now standard issue. They're gradually improving, but are definitely not in the 'new' category. However, revisiting these tools and examining how we can get more out of them is rarely done. We've now got a lot more information available on the physical performances of athletes to drive a deeper understanding of the video analysis, and some groups I've worked with are starting to sink their teeth into integrating some of these data. Taking some time to give yourself a bit of perspective can lead to older technologies and ideas being harnessed to provide a bigger boost to performance than the same time spent on 'new' technologies could.
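As a small example of what integrating these data can look like, here's a hypothetical Python sketch that tags each coded video event with the athlete's speed at that moment. It assumes the video coding export and the tracking data are already on the same clock (in reality syncing them is often the hard part) and that the tracking timestamps are sorted; the event layout is my own invention, not Sportscode's export format.

```python
from bisect import bisect_left


def nearest_sample(times, t):
    """Index of the tracking sample closest in time to t (times sorted ascending)."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    # choose whichever neighbour is closer in time
    return i if times[i] - t < t - times[i - 1] else i - 1


def annotate_events(events, times, speeds):
    """Attach the athlete's speed to each coded video event.

    events: list of (time_s, label) from the video coding (hypothetical layout).
    times, speeds: tracking samples, assumed to share the same synchronised clock.
    """
    return [(label, t, speeds[nearest_sample(times, t)]) for t, label in events]
```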

In other areas, the best way to harness an improvement to an old technology is to change your approach.

Let's take a simple 2D technique analysis as an example. In a very short time we've gone from big, bulky cameras providing good but not excellent vision to phones that can shoot bursts of high speed vision. This is a hugely under-utilised coaching tool, but at the same time it's led to a shift in the way a technique analysis is done. Before, the biomechanist would have been an integral part of the equation, as they would provide the vision and help the coach gain insight into the positives and negatives of the technique. The new technology has led to the scientist, and sometimes the coach, being sidelined. Is this a problem? Some, including a card-carrying biomechanist like me, would say no (maybe). The problem comes when the analysis is flawed: for instance, if the ideal technique the athlete is trying to move towards is misunderstood or just wrong for that athlete, or if the analysis is focussed on outcomes rather than causes. To make the most of the new technology, you need to change from service provider to educator, helping coach and athlete to maximise the value of the hugely powerful information the new technology has unlocked. You also need to manage the technology, helping to create the longitudinal record of progress that tracks whether coaching interventions are actually effective. Plus, the traditional biomechanical analysis still has a massive role to play in getting the best outcome for an athlete.

Having said all that, incorporating the best new equipment into your program can provide an immediate benefit. What's really great is when you can take a new technology, like the wearable sensors provided by Catapult, and with a bit of thinking and some neat analysis extend their use. This is where I'm working with a lot of groups, and it's providing some really exciting results...

keywords; sport science - analytics

I heard an interesting bit of trivia during the MLB playoffs this week: teams who have won at least 100 games don't have a great record in the playoffs. That didn't make a lot of sense; this is supposed to be the sport where the best team won't win every game but will win more in the long run.

After a bit of digging, it's actually worse than it looks at first glance. Since the Division Series was introduced in 1995, 22 teams have won at least 100 games: 12 of them were eliminated in the Division Series, 4 lost in the League Championship Series, 5 lost in the World Series and only 1 won the whole thing (the 114-win '98 Yankees).
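One way to put those numbers in perspective is to ask what would happen if every playoff series were a coin flip, regardless of regular season record. A minimal Python sketch, assuming each of these teams needed to win three series (Division Series, League Championship Series, World Series) with a 50% chance in each; it's a very crude model that ignores home advantage and series length.

```python
ROUNDS = ["Division Series", "League Championship Series", "World Series"]

# Counts for 100-win teams since 1995, as quoted above.
OBSERVED = {
    "lost Division Series": 12,
    "lost League Championship Series": 4,
    "lost World Series": 5,
    "won World Series": 1,
}


def coin_flip_expectation(n_teams, p_win=0.5):
    """Expected number of teams going out in each round (and winning it all)
    if every series were won with probability p_win, ignoring regular season record."""
    expected = {}
    alive = float(n_teams)
    for rnd in ROUNDS:
        expected[f"lost {rnd}"] = alive * (1 - p_win)
        alive *= p_win  # teams still alive after this round
    expected["won World Series"] = alive
    return expected


if __name__ == "__main__":
    for outcome, exp in coin_flip_expectation(22).items():
        print(f"{outcome:<32} observed {OBSERVED[outcome]:>2}   coin-flip {exp:5.2f}")
```

Under that model you'd expect roughly 11, 5.5, 2.75 and 2.75 teams in each bucket, so the observed split isn't far from what pure coin flips would produce.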

What it suggests is that regular season record has, at best, very little influence on postseason success. Is it a different game? There's a lot of commentary in a lot of different sports about game styles that succeed in the playoffs or finals or cup games or crucial games of any sort. But after seeing athletes perform in pressure situations up close, I think it's more to do with the individual athletes who can mentally cope with the situation. Some rise to the occasion, others are handcuffed by the pressure, and until the moment arrives it's hard to know how the athlete will react. So in this sense, the team that gets you to the playoffs might not be the best team to win them.

This perhaps gets to one of the points where the new clashes with the old. It's insanely difficult to place at-bats, or any stat in any sport, into context. How do you identify the best pressure performers with stats that can win over the coach who goes with his gut? I remember discussing an athlete's performance with a coach at a major event, and what it all came down to was "he's in, he's a winner".

So how do you make your team better? You listen, interpret, decode and disrupt. If a coach says "I think batting average with runners in scoring position is crucial", what they probably mean is that they value athletes who make the most of opportunities presented to them. Coming back and saying average with RISP doesn't make any difference will just create conflict. Decoding and saying "that's not what you want, what you really want is …" will move the conversation forward. Disrupting by saying "let's do something different to everyone else that makes the guys we already have better" will win the ultimate prize, whatever sport you're in.

keywords; sport science - analytics

The Washington Post called it, keeping players healthy is Moneyball version 2.0. The paradigm shift in how clubs value their players is being repeated in the athletic conditioning realm. But has the real revolution arrived or is it still on the horizon?

There's no doubt that pro teams look at wearable technologies as a way to maximise their investments in athletic talent. As a return on investment, this is an easy sell. You can demonstrate that keeping a player healthy has a real monetary value. But it's not a new phenomenon. It's what medical staff and athletic trainers have been doing throughout the history of pro sport.

Wearable technology and other advanced metrics have definitely given teams a boost in their ability to medically manage their roster. In reality, this is no different to the continual evolution of medical services with new technology and methods constantly being integrated into pro setups. The co-ordination of how trainers, medical staff, coaches and front office interact to maximise the effect of these new technologies is a great step forward, but it's also potentially a massive obstacle if the organization isn't all on the same page.

But in most pro sports, the real revolution is yet to come. You can see it starting here and there, some leagues are more advanced than others. It will look a bit different to anyone who hasn't been paying attention, maybe a coach who has an assistant who doesn't fit the normal mould. The competitive advantage won't come from any specific technology, it will come from the organization working together, using sport science to make everything they do from medical management to athlete conditioning to, most importantly, coaching, better.

That will be Moneyball v2.0.

I've been fortunate to work with a few teams and organizations who have worked this out, and the results have been far greater than the sum of the parts. Getting it right by looking at the bigger picture, for example with a health check from BioAlchemy, can unlock the massive gains that are available.

keywords; analytics

I spent last Friday at the PSCL sports analytics conference. It was great to get some different views on a few topics, but the take home message (my take home message at least) didn't really emerge until I sat back and digested all the presentations.

Analytics itself is a funny term, a catch all for everything to do with collecting, managing and getting information from data. There's such a voracious appetite for information in the modern world that we're always asking for more of it, with better analyses provided in as close to real time as possible. Even the casual fan has a hunger for more titbits of information, and as the business of sport grows the 'analytics' of sport are straining to keep up.

I've often asked what is 'analytics' in sport, and my answer now is that your view depends on where you're standing. The areas covered at the conference demonstrate this - here's a list taken from their website:

Even if you take that list and just look at the elite sport environment that I'm most familiar with, analytics could include data collection, management & analysis in statistical trends, player solutions, predictive analysis, and of course professional clubs & franchises. Break it down even further and player solutions alone would include (among other things) conditioning, wellness, performance enhancement and evaluations for list management. As an example, ESPN's Great Analytics Rankings feature mashes together what I would see as two distinctly different goals: analysis for acquiring or trading players, and analysis for enhancing the performance of players you already have in the system.

So how do you know if your organisation is winning the analytics battle? My view is that it's exactly the same as how the best applied sport scientists operate - work hard to do the fundamentals well and you'll be in the lead soon enough.

Good analytics means fast analysis. A lot of the time my service to clubs revolves around streamlining the data capture, management and analysis process. Time wasted manipulating the information to get it to a useful form is time lost in doing the thing that you've generally been hired to do - make insightful analyses on the information available.

Good analytics means thorough analysis. I see a lot of very superficial analysis going on in elite sport, focussing on the outcome rather than the root cause. Mostly this is a result of time pressure: when the question was asked, no time was taken to make sure that, first, it was the right question to ask and, second, the information available was adequate to answer it. Time spent getting it all right to begin with is time saved when you get the right result the first time.

Good analytics means not stopping at the retrospective stage - you need to progress to predictive analysis. Work out how to make a difference and do it.

So, the take home message? Just as in any business, if you spend some time and money to get the question right, streamline the processes (all the way from collection to analysis) and include predictions on what the analysis actually means then you're on the right track.

Also, just as in any business, not all of the knowledge about how to do this needs to reside in your own operation. If you need expert help, go out and get it - there are people out there, just like me, who have been there before in the elite environment and know how to help.

keywords; biomechanics - skill learning - augmented feedback

I recently had a great week with ASPIRE in Qatar, creating a program from scratch that integrated video feedback and force information (from two force plates). The goal of the project was to create a system that let the Strength and Conditioning team provide augmented feedback as soon as the rep was finished, helping their athletes learn the correct technique for the exercise they were doing.

It was a good technical project that took advantage of the power of the Dartfish video analysis program to display and store data along with the video, a feature of the program that is rarely used. But there were also some theoretical considerations about how augmented feedback should be used that needed to be taken into account. Just because you can create a program that provides feedback using fancy measurement and visualisation tools, it doesn't mean you should.

Whether explicit instruction is appropriate, how soon and how often feedback should be available, and how to keep the focus of attention external are all questions that should be asked when you're designing a system to provide augmented feedback to athletes. In all cases, as Mark Guadagnoli and Tim Lee pointed out in their article on the Challenge Point Framework, you can't learn without information, and there is an ideal amount of information that is specific to the learner and the task they're doing.

In the situation we had at ASPIRE, the system can provide feedback on results like jump height and peak power, as well as performance measures like the profile of force application split into left and right foot, or technical information from the video. This gives the coach the flexibility to provide less information to athletes who are perhaps just learning the basics of the movement, and more information to more skilled athletes when they are ready for it. Perhaps the most important aspect is that the feedback is rapid, so the information is available in time for the athlete to use it to help them learn.
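For anyone wondering where a number like jump height comes from: with two force plates it can be computed from the summed vertical force using the impulse-momentum method. The Python below is a minimal sketch under stated assumptions (a jump that starts with a period of quiet standing, forces in newtons at a known sampling rate, and take-off detected when total force drops below a hypothetical 20 N threshold). It is not the Dartfish-integrated program built at ASPIRE, and `analyse_jump` and the "left share of impulse" asymmetry measure are illustrative choices only.

```python
from dataclasses import dataclass
from typing import Sequence

G = 9.81  # gravitational acceleration (m/s^2)


@dataclass
class JumpFeedback:
    jump_height_m: float
    peak_power_w: float
    left_share_pct: float  # % of vertical impulse from the left plate before take-off


def analyse_jump(
    left_n: Sequence[float],
    right_n: Sequence[float],
    fs_hz: float,
    quiet_samples: int,
    takeoff_threshold_n: float = 20.0,  # hypothetical take-off threshold
) -> JumpFeedback:
    """Impulse-momentum analysis of a jump recorded on two force plates (sketch)."""
    if len(left_n) != len(right_n):
        raise ValueError("both plates need the same number of samples")
    dt = 1.0 / fs_hz
    total = [lf + rf for lf, rf in zip(left_n, right_n)]

    # Body weight (and so mass) from the initial quiet-standing period.
    body_weight = sum(total[:quiet_samples]) / quiet_samples
    mass = body_weight / G

    # Take-off: first sample after quiet standing where total force falls below threshold.
    takeoff = next(
        (i for i in range(quiet_samples, len(total)) if total[i] < takeoff_threshold_n),
        None,
    )
    if takeoff is None:
        raise ValueError("no take-off found in the force trace")

    # Net force / mass = centre-of-mass acceleration; trapezoidal integration gives velocity.
    accel = [(f - body_weight) / mass for f in total[: takeoff + 1]]
    velocity = [0.0]
    for i in range(1, takeoff + 1):
        velocity.append(velocity[-1] + 0.5 * (accel[i - 1] + accel[i]) * dt)

    # Rise of the centre of mass after take-off, treating it as a projectile.
    jump_height = velocity[takeoff] ** 2 / (2 * G)
    # Instantaneous power = force x velocity, over the contact phase.
    peak_power = max(f * v for f, v in zip(total[:takeoff], velocity[:takeoff]))
    left_share = 100.0 * sum(left_n[:takeoff]) / sum(total[:takeoff])
    return JumpFeedback(jump_height, peak_power, left_share)
```

Flight time (h = g·t²/8) is a simpler way to get jump height, but integrating force also yields the velocity needed for power, which is why this sketch takes that route.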

keywords; biomechanics - skill learning

At some stage in every AFL season the topic of kicking accuracy comes up. It's true that getting elite athletes to improve their skills is incredibly difficult and it's something that nobody seems to have really cracked, but that's not to say there aren't a lot of opinions about how to do it. To add to the discussion, here are a few of the areas that I think are crucial to an effective kicking program.

1. Make a plan and stay the course

There's been some good commentary in recent days about consistency of message; that you should find some voices that work for you and listen to them, blocking out the external noise. It's a difficult thing to do, especially in a football club, but it really is vital.

I was chatting with an AFL player one day after some goalkicking practice and he asked me what I saw and thought of his kick. Even though it was one of the general areas the club had employed me to help with, I wouldn't answer him. In fact, I told him that was the worst question he could ask, because he was having good success with his kicking and didn't need any other opinions in his head. Sometimes saying nothing is best, even though it's often hard to do. If there is a technical area to work on, go through the trusted voice rather than adding another 'advisor' into the mix; they can make the call as to whether it helps or just brings confusion.

On the flipside of this, I once asked a coach what he saw after a technical session. He had some great observations, and he was taking the right approach by keeping them from the athlete to avoid introducing confusion. The trouble was that there was nowhere for those observations to go, because there wasn't a clear 'lead' coach for the athlete's skill development, so those insights died. Everything could be important and should be thrown in the mix, but it should be funnelled through the mentor and not go directly to the athlete.

What is really important here is that you have a plan and give it time to work. There's no quick fix, so you need time to let a new technique become your technique.

Of course, this ignores the debate between intrinsic and extrinsic feedback and assumes that extrinsic feedback is necessary, but we'll leave that for another day.

2. Choose the right technique

Not all bodies are put together in the same way, so you need to choose the right technique for the athlete. A cookie cutter approach might work for some, but on the whole, if an athlete has a technical fault, they have probably already tried a lot of the solutions that have worked for others. It's likely they don't fit the 'normal' mould and need something different to work for them.

3. Take every opportunity to practice and learn

I don't agree that athletes don't get enough chances to practice their skills, and in my opinion the idea that load management by fitness staff leaves too little practice time just doesn't hold up. I would agree that there is less time to just kick the ball one on one with coaches in a traditional 'come here sonny and let's work on your kicking' session, but that doesn't mean there aren't enough opportunities to practice and learn. Too often a learning opportunity is wasted because everyone moves on to the next thing without reflecting on what just happened. If skills really are a priority, then you need to make the most of every opportunity you get to learn something more. There should still be room for just kicking a ball around and enjoying it (in fact, this is a fantastic tool for some discovery learning), but there needs to be balance.

4. Fit the challenge of the task to the athlete

There's a fantastic scholarly journal article by Mark Guadagnoli and Tim Lee that outlines a framework for optimising learning by fitting the challenge of the task to the athlete. They begin by stating that learning cannot happen without information, that learning will be hindered if there's too much or too little information, and that there is an optimal amount of information that differs from athlete to athlete depending on their own level of skill.

There's a lot more to the framework than that, but if you can get these building blocks right then you're a long way down the track to an effective skill development program.

How I interpret the challenge point framework in a practical setting is that a skill like a set shot at goal has a certain baseline level of challenge that stays the same. On top of that baseline come other factors like target size, pressure and fatigue. If you want to train for a high pressure situation without actually being in that situation, then you need to adjust the challenge of the skill, for example by reducing the target size or introducing consequences, until you hit just the right amount of challenge for that athlete.
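One way to make "adjust the challenge until it's just right" concrete is an adaptive staircase, a technique borrowed from psychophysics rather than something Guadagnoli and Lee prescribe. This Python sketch assumes target width (in metres) is the only thing being adjusted: two hits in a row shrink the target, and any miss widens it. A standard result for this 2-down/1-up rule is that it tends to hover around a success rate of roughly 71%. The class name, starting width, step size and limits are all hypothetical values for illustration.

```python
from dataclasses import dataclass


@dataclass
class TargetStaircase:
    """2-down/1-up staircase on target width (hypothetical parameters)."""
    width_m: float = 10.0      # starting target width
    step_m: float = 1.0        # change applied at each adjustment
    min_width_m: float = 2.0   # hardest setting allowed
    max_width_m: float = 15.0  # easiest setting allowed
    _streak: int = 0           # consecutive hits since the last change

    def record(self, hit: bool) -> float:
        """Log one kick and return the target width for the next kick."""
        if hit:
            self._streak += 1
            if self._streak == 2:
                # Two successes in a row: make the task harder.
                self.width_m = max(self.min_width_m, self.width_m - self.step_m)
                self._streak = 0
        else:
            # Any miss: make the task easier and restart the streak.
            self.width_m = min(self.max_width_m, self.width_m + self.step_m)
            self._streak = 0
        return self.width_m
```

The same idea could be applied to pressure or consequences instead of target size; the point is only that the challenge moves in response to how the individual athlete is actually performing.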

This sort of technical training is only one factor in rising to the overall challenge of the situation. There should be complementary programs to, for instance, help an athlete cope with the pressure of game situations, or deal with the fatigue they're probably feeling when the opportunity comes in a game. We do tend to focus on the physical aspects, hoping that by making an athlete technically better there will be enough capacity to deal with any reduction in their technical ability due to pressure, but this shouldn't be the case. Performance psychology needs to be used in conjunction with an appropriately planned technical program that is targeted to the individual athlete, with all the physical and technical differences they bring to the table.

There are other debates to be had, like the role of augmented feedback, avoiding an internal attentional focus, the value of errorless learning and, as I said earlier, intrinsic vs extrinsic feedback. It's a hugely complex area that a lot of experts can dip an oar into. But with a proper plan that is tailored to the individual needs and skill level of the athlete, you're going to be well on the way to a good result.

keywords; statistics - analytics

A few weeks ago, a colleague of mine was wondering about strategies to help Major League Baseballers sleep better during the season so they aren't so fatigued when September rolls around. The question intrigued me so I did a bit of digging myself.

It's an interesting problem. There's no doubt the long season and constant travel take a massive toll on the athletes, but is there any evidence of an effect on performance, or are they able to cope with the physical demands without compromising their game output?

I started with one basic assumption: accumulated sleep debt would affect pitchers less than position players, as starting pitchers have more recovery time between starts. This would mean that over the course of a season, the pitchers would steadily become more dominant.

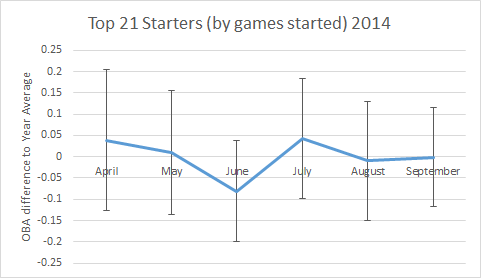

To begin with I just wanted a quick look, so I collated the monthly performance of the top 21 starters from 2014 (by appearances) from MLB.com. Now, I know the good folk of SABR would be horrified that I used the very basic opposition batting average as my stat of choice, but it was a rough and ready stat that suited a first look. Here's a graph of the results (opposition batting average is expressed here as the difference from the yearly average so that different years can be compared later on).
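The "difference from the yearly average" normalisation is simple arithmetic: pool hits and at bats for each month, divide, and subtract the pooled yearly figure. A minimal Python sketch, assuming the monthly splits have already been collated into `(pitcher, month, hits_allowed, at_bats_against)` rows; the function name and row layout are hypothetical, and pooling across pitchers (rather than averaging each pitcher's own average) is an assumption, since the text doesn't say which was used.

```python
from collections import defaultdict


def monthly_avg_vs_year(rows):
    """rows: iterable of (pitcher, month, hits_allowed, at_bats_against).

    Returns {month: monthly opposition batting average minus the yearly average},
    pooling hits and at bats across all pitchers (an assumption).
    """
    hits = defaultdict(int)
    at_bats = defaultdict(int)
    for _pitcher, month, h, ab in rows:
        hits[month] += h
        at_bats[month] += ab

    yearly_avg = sum(hits.values()) / sum(at_bats.values())
    # Positive values mean batters hit better than usual against these pitchers that month.
    return {
        month: hits[month] / at_bats[month] - yearly_avg
        for month in sorted(at_bats)
        if at_bats[month] > 0
    }
```

Pooling weights each pitcher by the at bats they faced that month, which suits a quick look; averaging per-pitcher averages would instead give every pitcher equal weight.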

The results didn't show any season-long deterioration in batting average, but there were some strange variations in June/July that could probably do with some further investigation.

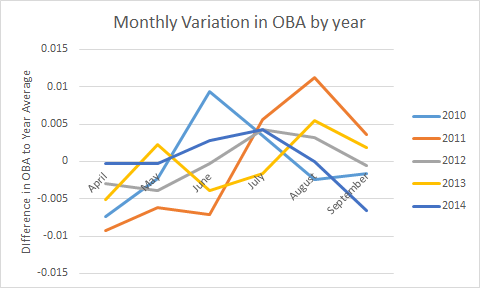

So that meant digging a bit deeper into the game-by-game stats, which I didn't really want to do via the MLB.com stat page. The other option was to quickly write a program that would act as my own analysis engine and bulk process all the at bats from 2014, which is what I ended up doing. It didn't take too long in the end; the greatest challenge was probably extracting detail from the individual at bats, given the huge variety of ways a play can unfold in baseball, each with its own kind of description in the data available online. What this did allow me to do was quickly look at more than just 2014 to see if the June/July variations were repeated across seasons.
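The fiddly part, turning free-text play descriptions into outcomes, might look something like this keyword-based Python sketch. It assumes each description covers one completed plate appearance and is written in plain English (for example "Player A singles on a line drive to left fielder."); the patterns, outcome labels and function names are hypothetical and nowhere near complete, which is exactly why this step is the hard part. It follows the usual scoring convention that walks, hit-by-pitches, sacrifices and catcher's interference are not at bats.

```python
import re

# Hypothetical keyword patterns; real play-by-play text has many more variants.
NOT_AT_BAT = re.compile(
    r"\b(walks|hit by pitch|sacrifice fly|sacrifice bunt|catcher interference)\b",
    re.IGNORECASE,
)
HIT = re.compile(
    r"\b(singles|doubles|triples|homers|ground-rule double|grand slam)\b",
    re.IGNORECASE,
)


def classify_plate_appearance(description: str) -> str:
    """Return 'not_at_bat', 'hit' or 'at_bat_no_hit' for one plate appearance."""
    # Check non-at-bat outcomes first: "out on a sacrifice fly" is an out,
    # but it does not count as an at bat for batting average.
    if NOT_AT_BAT.search(description):
        return "not_at_bat"
    if HIT.search(description):
        return "hit"
    # Everything else (strikeouts, groundouts, errors, fielder's choices)
    # is treated as an at bat without a hit.
    return "at_bat_no_hit"


def batting_average(descriptions) -> float:
    """Hits divided by at bats over a list of plate-appearance descriptions."""
    outcomes = [classify_plate_appearance(d) for d in descriptions]
    at_bats = sum(o != "not_at_bat" for o in outcomes)
    hits = outcomes.count("hit")
    return hits / at_bats if at_bats else float("nan")
```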

Again, SABR folk would be shouting at their screens if they read this, but opposition batting average was the stat I used for a rough look. To focus on athletes who had played the majority of the season, and so would be more likely candidates for accumulated sleep debt, I limited the pitchers to anyone with over 200 batters faced and the batters to anyone with over 100 at bats. The results showed that although most of the year appears random, the opposition batting average was consistently higher than the yearly average during July.
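The July comparison across seasons, with the playing-time filters described above, could be sketched in Python as follows. It assumes plate appearances have already been classified into a `PlateAppearance` record (a hypothetical structure), reads "batters faced" as plate appearances against a pitcher, and applies both filters within each season while keeping only at bats where both the pitcher and the batter qualify. That last point is one reading of the text, which doesn't specify how the two filters were combined.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, NamedTuple


class PlateAppearance(NamedTuple):
    season: int
    month: int        # 1-12
    pitcher: str
    batter: str
    is_at_bat: bool
    is_hit: bool


def july_difference_by_season(
    pas: Iterable[PlateAppearance],
    min_batters_faced: int = 200,
    min_at_bats: int = 100,
) -> Dict[int, float]:
    """For each season: July opposition batting average minus the season average,
    counting only pitchers with > min_batters_faced and batters with > min_at_bats."""
    by_season = defaultdict(list)
    for pa in pas:
        by_season[pa.season].append(pa)

    result = {}
    for season, season_pas in sorted(by_season.items()):
        batters_faced = Counter(pa.pitcher for pa in season_pas)
        at_bats = Counter(pa.batter for pa in season_pas if pa.is_at_bat)
        kept = [
            pa for pa in season_pas
            if pa.is_at_bat
            and batters_faced[pa.pitcher] > min_batters_faced
            and at_bats[pa.batter] > min_at_bats
        ]
        july = [pa for pa in kept if pa.month == 7]
        if not july:
            continue  # nothing to compare for this season
        season_avg = sum(pa.is_hit for pa in kept) / len(kept)
        july_avg = sum(pa.is_hit for pa in july) / len(july)
        result[season] = july_avg - season_avg
    return result
```

A positive value for a season means batters hit better than their season average in July, which is the pattern described above.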